Setting Up Ollama and Running DeepSpeed on Linux

Introduction

Ollama is a tool for downloading and running large language models on local hardware. DeepSpeed, Microsoft's deep learning optimization library for PyTorch, complements it rather than plugging into it. Ollama serves ready-made quantized models through a simple command-line interface, while DeepSpeed accelerates inference and fine-tuning of PyTorch models such as those published on Hugging Face. In this guide, we will walk through setting up both on a Linux system.

Prerequisites

Before proceeding, ensure that your system meets the following requirements:

- A Linux-based operating system (Ubuntu 20.04 or later recommended)
- An NVIDIA GPU with CUDA support and a recent NVIDIA driver installed
- Python 3.8 or later
- pip and the venv module (on Ubuntu, the python3-venv package)
- Enough disk space, RAM, and GPU memory for the models you plan to run
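The checks above can be scripted. The following is a minimal sketch using only the Python standard library: it tests the interpreter version, looks for nvidia-smi (installed with the NVIDIA driver) on the PATH, and reports free disk space. The 20 GB disk threshold is an arbitrary illustrative value, not an official requirement; adjust it to the models you plan to run.

```python
import shutil
import sys


def check_prerequisites(path: str = ".", min_python=(3, 8), min_free_gb: float = 20.0) -> dict:
    """Report whether this machine meets the basic prerequisites."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return {
        # Compare the running interpreter against the minimum version.
        "python_ok": sys.version_info >= min_python,
        # nvidia-smi ships with the NVIDIA driver, so its presence hints that the driver is installed.
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
        "free_disk_gb": round(free_gb, 1),
        # Illustrative threshold only; real needs depend on the models you download.
        "disk_ok": free_gb >= min_free_gb,
    }


if __name__ == "__main__":
    for key, value in check_prerequisites().items():
        print(f"{key}: {value}")
```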

Step 1: Installing Ollama

Ollama provides an easy-to-use interface for managing large language models. To install it on Linux, follow these steps:

1. Open a terminal and update your system:

sudo apt update && sudo apt upgrade -y

2. Download and install Ollama:

curl -fsSL https://ollama.ai/install.sh | sh

3. Verify the installation:

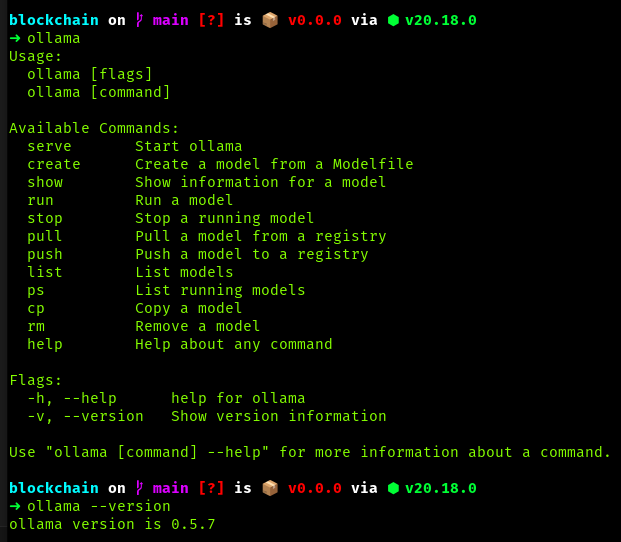

ollama --version

If the installation was successful, you should see the version number displayed.
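The version check confirms that the CLI is installed, but commands such as pull and run also need the Ollama server. On Linux the installer normally registers it as a systemd service when systemd is available; otherwise `ollama serve` starts it manually. The sketch below assumes the server listens on its default address `127.0.0.1:11434` and queries the REST endpoint `/api/version`, returning `None` when nothing answers.

```python
import json
import urllib.request
from typing import Optional

# Assumption: Ollama's default local address; change it if you configured OLLAMA_HOST.
OLLAMA_URL = "http://127.0.0.1:11434"


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 3.0) -> Optional[str]:
    """Return the server's version string, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (OSError, ValueError):
        # OSError covers urllib's URLError (connection refused, timeouts);
        # ValueError covers a response body that is not valid JSON.
        return None


if __name__ == "__main__":
    version = ollama_version()
    print(f"Ollama server version: {version}" if version else "Ollama server not reachable")
```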

Step 2: Setting Up DeepSpeed

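DeepSpeed is Microsoft's library for speeding up training and inference of PyTorch models. For inference it offers optimized CUDA kernels for transformer layers and can split a model across several GPUs. To install and configure it: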

1. Create and activate a Python virtual environment:

python3 -m venv deepspeed_env

source deepspeed_env/bin/activate

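2. Install PyTorch first, since DeepSpeed's installer expects it to be present, then install DeepSpeed and Hugging Face Transformers:

pip install torch

pip install deepspeed transformers

DeepSpeed compiles some of its CUDA ops the first time they are used, so you may also need a CUDA toolkit whose version matches the CUDA version of your PyTorch build.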

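3. Verify the installation. The ds_report command, installed together with DeepSpeed, prints the detected DeepSpeed, PyTorch, and CUDA versions and shows which of DeepSpeed's optional ops are compatible with your system:

ds_report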
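To confirm that the packages landed in the virtual environment rather than in the system Python, a small standard-library script can report the active environment and the installed versions. This is a sketch: the package list simply mirrors the three packages installed above, and the CUDA check only runs if PyTorch imports.

```python
import sys
from importlib import metadata


def package_versions(names) -> dict:
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the base interpreter.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print(package_versions(["deepspeed", "torch", "transformers"]))
    try:
        import torch
        print("CUDA available to PyTorch:", torch.cuda.is_available())
    except ImportError:
        print("PyTorch is not installed in this environment")
```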

Step 3: Running a Model with Ollama and DeepSpeed

Now that we have both tools installed, we can load a model and test it.

1. Pull a model with Ollama:

ollama pull mistral

This downloads Mistral 7B (a quantized build by default), which we will use for testing.
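The ollama list command shows which models are available locally. The same information is exposed by the server at `GET /api/tags`; the sketch below, assuming the default address, extracts the model names (such as `mistral:latest`) from that response.

```python
import json
import urllib.request


def model_names(tags: dict) -> list:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in tags.get("models", [])]


def list_local_models(base_url: str = "http://127.0.0.1:11434") -> list:
    # GET /api/tags lists the models already pulled to this machine.
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    try:
        names = list_local_models()
        print("mistral pulled:", any(n.split(":")[0] == "mistral" for n in names))
    except OSError as exc:
        print("Could not reach the Ollama server:", exc)
```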

2. Run inference with Ollama:

ollama run mistral "Hello, how are you?"

If successful, the model should generate a response.
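The CLI talks to the local server's REST API, so the same prompt can be sent from Python. This sketch, assuming the default address, posts to `/api/generate` with `"stream": false`, which makes the server return a single JSON object whose `response` field holds the whole answer instead of a stream of partial chunks.

```python
import json
import urllib.request


def build_generate_request(model: str, prompt: str, base_url: str = "http://127.0.0.1:11434"):
    """Build a non-streaming POST /api/generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    # With streaming disabled, the full completion arrives in the "response" field.
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=timeout) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        print(generate("mistral", "Hello, how are you?"))
    except OSError as exc:
        print("Could not reach the Ollama server:", exc)
```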

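3. Use DeepSpeed to optimize inference. DeepSpeed cannot use the model files Ollama downloads (Ollama stores models in its own GGUF-based format), so this example loads a PyTorch model from Hugging Face instead: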

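```python
import torch
import deepspeed
from transformers import AutoModelForCausalLM, AutoTokenizer

# Gated repository: request access on Hugging Face and run `huggingface-cli login` first.
# The "-hf" variant is the checkpoint converted to the Transformers format.
model_name = "meta-llama/Llama-2-7b-hf"

tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float16)

# Wrap the model in DeepSpeed's inference engine (which places it on the GPU);
# kernel injection swaps supported transformer layers for fused CUDA kernels.
ds_model = deepspeed.init_inference(model, dtype=torch.float16, replace_with_kernel_inject=True)

prompt = "What is DeepSpeed?"
inputs = tokenizer(prompt, return_tensors="pt").to("cuda")

# Call generate() on the wrapped Hugging Face module and cap the output length.
outputs = ds_model.module.generate(**inputs, max_new_tokens=100)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```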
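Loading the model in float16 matters for memory. Weight memory is roughly the parameter count times the bytes per parameter, so a 7B model needs about 14 GB in float16 versus about 28 GB in float32, before counting activations and the KV cache. The sketch below does this arithmetic; treating "7B" as exactly 7e9 parameters is a simplification.

```python
# Bytes per parameter for common weight formats.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "float16") -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in ("float32", "float16", "int8"):
        print(f"7B model, {dtype}: ~{weight_memory_gb(7e9, dtype):.0f} GB")
```

Ollama's default quantized builds store weights in roughly 4 bits per parameter, which is why a 7B model such as Mistral fits on much smaller GPUs than the float16 example needs.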

Example: DeepSeek-R1 1.5B

Conclusion

By installing Ollama and DeepSpeed on Linux, you can run and optimize large language models on local hardware, reducing dependency on cloud services. For further adaptation, DeepSpeed supports memory-efficient fine-tuning through its ZeRO optimizer, and Ollama can import fine-tuned weights or LoRA adapters through a Modelfile.